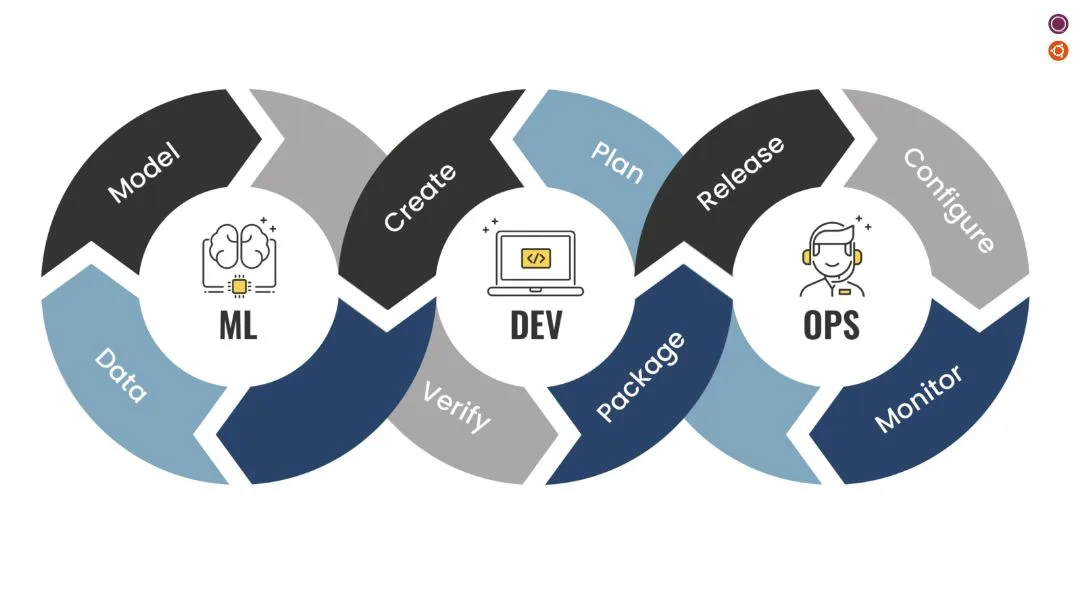

MLOps, short for Machine Learning Operations, is a set of practices and principles that aim to streamline and operationalize the end-to-end machine learning (ML) lifecycle. It involves the integration of machine learning systems into the broader IT and business infrastructure, addressing challenges related to deploying, monitoring, and maintaining machine learning models in production environments. The primary objectives of MLOps include:

Collaboration:

Facilitating collaboration between data scientists, data engineers, and operations teams to ensure seamless communication and cooperation throughout the ML lifecycle.

Automation:

Implementing automation in the ML workflow, including tasks such as model training, testing, deployment, and monitoring, to improve efficiency and reduce manual effort.

Version Control:

Applying version control practices to manage and track changes to machine learning models, datasets, and associated code, promoting reproducibility.

Continuous Integration/Continuous Deployment (CI/CD):

Utilizing CI/CD pipelines to automate the testing, validation, and deployment of machine learning models, enabling rapid and reliable model deployment.

Monitoring and Logging:

Establishing robust monitoring and logging systems to track the performance of machine learning models in real time, detect anomalies, and ensure model health.

Scalability:

Designing machine learning systems to scale effectively, both in terms of handling larger datasets and accommodating increased workloads.

Infrastructure as Code (IaC):

Treating infrastructure configuration as code, allowing for consistent and automated infrastructure provisioning, which is crucial for reproducibility.

Model Governance and Compliance:

Implementing governance practices to manage the lifecycle of machine learning models, ensuring compliance with regulations, ethical guidelines, and organizational policies.

Model Deployment and Orchestration:

Deploying machine learning models into production environments efficiently and orchestrating their execution, often leveraging containerization technologies like Docker and orchestration tools like Kubernetes.

Model Registry:

Maintaining a centralized repository or registry for tracking different versions of machine learning models, facilitating model management and collaboration.

Security:

Incorporating security measures to protect sensitive data, ensure secure access to machine learning systems, and address potential vulnerabilities.
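
To make a few of these objectives concrete, here is a minimal sketch in Python that combines three of them: version control (content hashes for model and dataset artifacts), a model registry (tracking numbered versions per model), and a CI/CD-style validation gate (promoting a version only if it meets a metric threshold). Everything in it is an illustrative assumption rather than the API of any real tool: the names `fingerprint`, `ModelVersion`, `ModelRegistry`, and `promote`, the `"churn"` model, the byte strings standing in for artifacts, and the 0.85 accuracy threshold are all hypothetical. A production setup would typically use a dedicated registry service and persistent storage instead of an in-memory dictionary.

```python
import hashlib
from dataclasses import dataclass, field
from typing import Dict, List, Optional


def fingerprint(payload: bytes) -> str:
    """Short content hash used as a version identifier for an artifact."""
    return hashlib.sha256(payload).hexdigest()[:12]


@dataclass
class ModelVersion:
    name: str
    version: int
    artifact_hash: str   # identifies the exact model weights
    dataset_hash: str    # identifies the exact training data
    metrics: Dict[str, float]
    stage: str = "staging"


@dataclass
class ModelRegistry:
    """In-memory stand-in for a centralized model registry (hypothetical)."""
    _versions: Dict[str, List[ModelVersion]] = field(default_factory=dict)

    def register(self, name: str, artifact: bytes, dataset: bytes,
                 metrics: Dict[str, float]) -> ModelVersion:
        # Versions are numbered sequentially per model name, starting at 1.
        versions = self._versions.setdefault(name, [])
        entry = ModelVersion(name, len(versions) + 1, fingerprint(artifact),
                             fingerprint(dataset), dict(metrics))
        versions.append(entry)
        return entry

    def production(self, name: str) -> Optional[ModelVersion]:
        # Return the version currently marked as production, if any.
        for entry in reversed(self._versions.get(name, [])):
            if entry.stage == "production":
                return entry
        return None

    def promote(self, name: str, version: int, min_accuracy: float) -> bool:
        """Validation gate: promote only if accuracy meets the threshold."""
        candidate = self._versions[name][version - 1]
        if candidate.metrics.get("accuracy", 0.0) < min_accuracy:
            return False
        current = self.production(name)
        if current is not None:
            current.stage = "archived"
        candidate.stage = "production"
        return True


# Example usage with placeholder artifacts and metrics.
registry = ModelRegistry()
v1 = registry.register("churn", b"weights-v1", b"rows-2024-01", {"accuracy": 0.91})
v2 = registry.register("churn", b"weights-v2", b"rows-2024-02", {"accuracy": 0.78})

promoted_v1 = registry.promote("churn", 1, min_accuracy=0.85)  # meets the gate
promoted_v2 = registry.promote("churn", 2, min_accuracy=0.85)  # below the gate
```

In this sketch the first version is promoted to production, while the second stays in staging because its accuracy falls below the threshold. Recording both the artifact hash and the dataset hash on each version is what ties the registry back to reproducibility: the same hashes always identify the same inputs.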